sparsity_causal_simulations

import pandas as pd

In this post, I’ll introduce the econml python package and use it to compare double machine learning and doubly robust learning. I’ll look at whether bootstrapping standard errors improves the coverage of confidence intervals, and I’ll also look at whether sample size influences estimation accuracy.

I. Introduction to econml

The econml package was developed by the ALICE team at microsoft research to facilitate estimation of causal effects. This package builds upon scikit-learn and presents several different algorithms for causal inference in one unified framework.

One of the main reasons to use econml is its integration with scikit-learn. Because econml uses scikit-learn style estimators under the hood, it is easy to evaluate how a model would do if a different estimation style were used by simply swapping out one line in the code.

Another big reason to use econml is that estimators defined in econml all inherit from the same base class. In practice, this means that the methods used to fit models and present treatment effects are the same, regardless of which estimator you are using. So, in this post, switching between double machine learning and doubly robust machine learning is very simple–no additional documentation reading is needed.

II. Brief introduction to double machine and doubly robust learning.

Doubly robust machine learning involves fitting two models: one model to predict the treatment X from possible confounders, and another model to predict the outcome Y from X as well as possible confounders. One of the parameters estimated in the second model is used to estimate the average treatment effect of X on Y. This method was developed by Robins et al. in 1994, and has been frequently been used under the name inverse probability weighting. For more on doubly robust machine learning, see this blog post.

Double machine learning involves fitting two models: one model to predict X from confounders and another model to predict Y from the confounders. The residuals of the two models are then used to generate estimates of the causal effect of X on Y. I wrote this post on double machine learning for more of an overview.

III. Data generation.

For this simulation, I generated data in a similar method as my previous blog post. However, I converted x to a binary variable because doubly robust learning only works with categorical variables.

import numpy as np

import pandas as pd

def generate_data(n = 400, n_confounders = 10,

n_possible_confounders = 300,

causal_effect_size = 2,

beta_c_x = None, beta_c_y = 1,

sd_x_error = None,

sd_confounders = 1):

if beta_c_x is None:

beta_c_x = np.sqrt(1/(n_confounders + 1)) #this lets our x have a variance of 1

if sd_x_error is None:

sd_x_error = np.sqrt(1/(n_confounders + 1)) #same as above

zero_sequence = np.zeros(n) # a sequence of zeroes the length of our model

data_dict = dict()

x = m1 = m2 = zero_sequence

for i in range(n_confounders): # We will have some variables that are true confounders

c = np.random.normal(size = n, scale = sd_confounders) # Create some variance in the confounder

m1 = m1 + c*beta_c_x

m2 = m2 + c*beta_c_y

data_dict["c" + str(i)] = c

for i in range(n_confounders,n_possible_confounders): #because we are testing sparsity, the rest of the variables will have zero effect.

c = np.random.normal(size = n, scale = sd_confounders)

data_dict["fc" + str(i)] = c #label them as fake

x = ((np.random.normal(size = n, scale = sd_x_error) + m1 ) > 0) * 1 #convert to binary to make more estimation methods

y = x * causal_effect_size + m2 + np.random.normal(size = n, scale = np.std(m2) /10)

data_dict["x"] = x #replaces the column of zeroes we made before

data_dict["y"] = y

output_df = pd.DataFrame(data_dict)

return output_df

Next, I defined a function to generate an estimate of the average treatment effect from a simulated dataset. As you can see, I can pass in either a LinearDRLearner or a LinearDML object to the function and use the same methods on the object to get simulated effects.

Both methods generate confidence intervals using traditional statistical techniques (“statsmodels”) or with bootstrapping. Here, I allow my function to conduct estimation under both techniques.

from econml.dr import LinearDRLearner

from econml.dml import LinearDML

estimators_to_test = {

"dml_linear_bootstrap": [LinearDML(), "bootstrap"],

"dml_linear_statsmodels": [LinearDML(), "statsmodels"],

"dr_linear_bootstrap": [LinearDRLearner(), "bootstrap"],

"dr_linear_statsmodels": [LinearDRLearner(), "statsmodels"]

}

def get_econml_estimate(sim_df, est, inference_method = "bootstrap", sample_size = 0, estimator_name = "", alpha = 0.05):

est.fit(sim_df["y"], T = sim_df["x"], W=sim_df.drop(["x","y"], axis = 1), inference = inference_method)

lb, ub = est.ate_interval(alpha=alpha) # OLS confidence intervals

point_estimate = est.ate()

return {"lower": lb, "estimate": point_estimate, "upper": ub, "n": sample_size, "estimator_name": estimator_name}

I’ll also define a function to get effects using a standard linear model.

import statsmodels.api as sm

def get_lm_interval(sim_df, sample_size = 0, alpha = 0.05):

y = sim_df["y"]

#sim_df["interaction"] = sim_df["moderator"] * sim_df["x"]

x = sim_df.drop("y", axis = 1)

ols_model = sm.OLS(y, x)

ols_results = ols_model.fit()

results_df = pd.DataFrame(ols_results.summary(alpha = alpha).tables[1].data)

results_df.index = results_df[0]

return {"lower": float(results_df.loc["x",5]),

"upper": float(results_df.loc["x",6]),

"estimate": float(results_df.loc["x",1]),

"n": sample_size,

"estimator_name": "standard_linear"

}

def get_lm_interval_small(sim_df, sample_size = 0, alpha = 0.05):

y = sim_df["y"]

x = sim_df.drop(["y"] + [col for col in sim_df.columns if col.startswith("f")], axis = 1)

ols_model = sm.OLS(y, x)

ols_results = ols_model.fit()

results_df = pd.DataFrame(ols_results.summary(alpha = alpha).tables[1].data)

results_df.index = results_df[0]

return {"lower": float(results_df.loc["x",5]),

"upper": float(results_df.loc["x",6]),

"estimate": float(results_df.loc["x",1]),

"n": sample_size,

"estimator_name": "standard_linear_small"

}

import warnings

warnings.filterwarnings('ignore')

import pickle

all_estimates = []

num_reps = 50

for sample_size in [400, 1600, 6400]:

print(sample_size)

for i in range(num_reps):

sim_df = generate_data(sample_size)

for estimator_name in estimators_to_test.keys():

estimate_dict = get_econml_estimate(sim_df,

estimators_to_test[estimator_name][0],

estimators_to_test[estimator_name][1],

sample_size = sample_size,

estimator_name = estimator_name)

all_estimates.append(estimate_dict)

all_estimates.append(get_lm_interval_small(sim_df, sample_size = sample_size))

all_estimates.append(get_lm_interval(sim_df, sample_size = sample_size))

with open('simulations_4_feb.pickle', 'wb') as handle:

pickle.dump(all_estimates, handle)

400

1600

6400

import pickle

with open('all_estimates_4_feb.pickle', 'wb') as handle:

pickle.dump(all_estimates, handle, protocol=pickle.HIGHEST_PROTOCOL)

all_estimates_df = pd.DataFrame(all_estimates)

all_estimates_df["width"] = all_estimates_df["upper"] - all_estimates_df["lower"]

all_estimates_df.head(10)

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

all_estimates_df.to_pickle("all_estimates_5_feb.pkl")

import pandas as pd

all_estimates_df = pd.read_pickle("all_estimates_5_feb.pkl")

First, let’s look at whether different estimators are biased.

The bias of an estimator d is defined as the expected value of the estimator minus the true parameter value:

$$E(\hat{d} - d)$$

For an estimator to be useful, the estimator must have zero bias. If an estimator systematically delivers high or low estimates, that estimator is useless. In the presence of confounding (such as in this situation), it is especially important for the estimator to eliminate bias which originates from confounders.

import numpy as np

## Bias

def bias(x):

return np.mean(x) - 2

def mse(x):

return np.mean((np.array(x) - 2) * (np.array(x) - 2))

def sd(x):

return np.std(x)

bias_df = all_estimates_df.groupby(["estimator_name", "n"])["estimate"].agg(

[bias, sd]

)

bias_df["bias_sd"] = np.round(bias_df["bias"]).astype(str) + np.round(bias_df["sd"]).astype(str)

#

bias_df["bias_sd"] = np.round(bias_df["bias"], 3).astype(str) + " (" + np.round(bias_df["sd"]/ np.sqrt(50), 3).astype(str) + ")"

| A | B | |

|---|---|---|

| a | 1 | 1 |

| a | 2 | 2 |

| b | 3 | 3 |

bias_df = bias_df.reset_index().pivot(index = "estimator_name", columns = "n", values = "bias_sd").reset_index().loc[[0, 2, 4],:]

bias_df["Name"] = ["Double Machine Learning", "Doubly Robust Estimation", "Standard Linear Model"]

bias_df.drop("estimator_name", axis = 1, inplace = True)

bias_df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

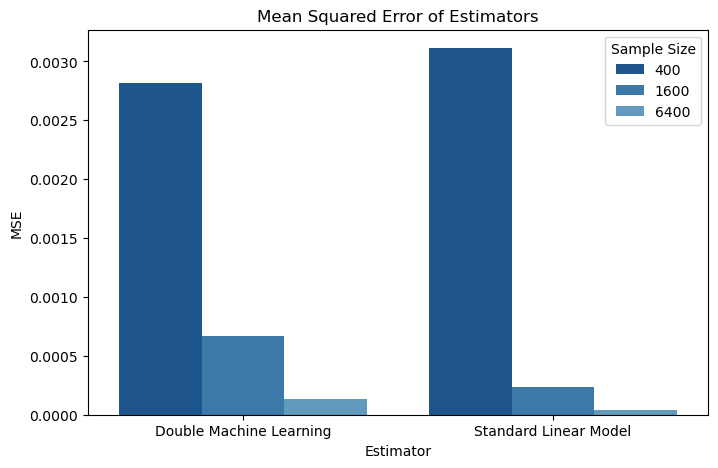

Next, let’s look at mean squared error, or how close estimates tend to be to the actual parameter value. An estimator with a lower mean squared error is more useful than an estimator with a higher mean squared error because it will estimate the parameter of interest with more precision.

Mean squared error is defined as:

$$E[(\hat{d} - d)^2]$$

import numpy as np

## mean squared error

mse_df = all_estimates_df.groupby(["estimator_name", "n"])["estimate"].agg([mse]).reset_index()

translation_dict = {"dml_linear_bootstrap": "Double Machine Learning (Bootstrap)",

"dml_linear_statsmodels": "Double Machine Learning (Bootstrap)",

"dr_linear_bootstrap": "Doubly Robust Estimation (Bootstrap)",

"dr_linear_statsmodels": "Doubly Robust Estimation (Bootstrap)",

"standard_linear": "Standard Linear Model"

}

translation_dict = {"dml_linear_bootstrap": "Double Machine Learning",

"dml_linear_statsmodels": "Double Machine Learning",

"dr_linear_bootstrap": "Doubly Robust Estimation",

"dr_linear_statsmodels": "Doubly Robust Estimation",

"standard_linear": "Standard Linear Model"

}

mse_df = mse_df.loc[mse_df["estimator_name"].isin(

["dml_linear_statsmodels", "dr_linear_statsmodels", "standard_linear"]),:]

mse_df["Estimator"] = mse_df["estimator_name"].apply(lambda x: translation_dict[x])

import seaborn as sns

import matplotlib.pyplot as plt

mse_df["MSE"] = mse_df["mse"]

mse_df["Sample Size"] = mse_df["n"]

sns.barplot(data=mse_df.loc[ (mse_df["estimator_name"] != "dr_linear_statsmodels"), :],

x="Estimator", y="MSE", hue="Sample Size",

palette = sns.color_palette("Blues_r"))

plt.title("Mean Squared Error of Estimators")

plt.show()

mse_df["mse"] = mse_df["mse"].astype(float).round(6).astype(str)

mse_df.pivot(index = "Name", columns = "n", values = "mse").reset_index()

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

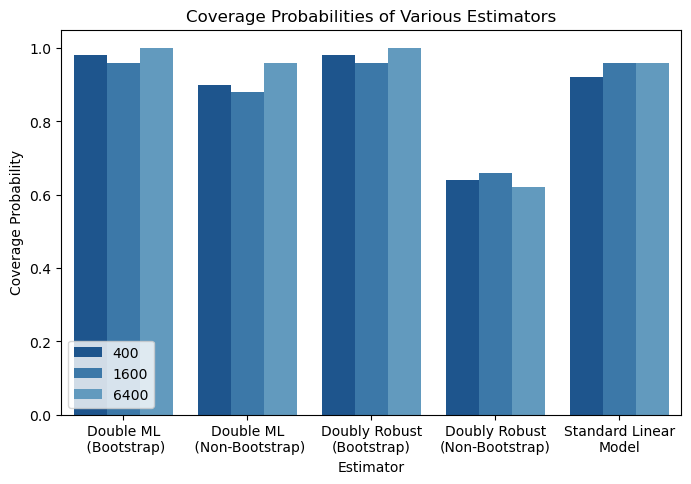

Finally, let’s look at coverage probabilities. We calculated 95% confidence intervals for our estimates using both bootstrap estimation and estimation based on a known distribution. In bootstrap variance estimation, the dataset is duplicated many times by sampling with replacement and the quantiles from the distribution of these estimations are used to determine confidence intervals. In estimation based on a known distribution, statistical theory is used to determine what the variance of the estimate should be, and confidence intervals are generated from the normal or t distribution.

translation_dict = {"dml_linear_bootstrap": "Double ML\n (Bootstrap)",

"dml_linear_statsmodels": "Double ML\n (Non-Bootstrap)",

"dr_linear_bootstrap": "Doubly Robust\n(Bootstrap)",

"dr_linear_statsmodels": "Doubly Robust\n(Non-Bootstrap)",

"standard_linear": "Standard Linear\nModel"

}

import numpy as np

## coverage

all_estimates_df["covered"] = (all_estimates_df["upper"] > 2) & (all_estimates_df["lower"] < 2)

coverage_df = all_estimates_df.groupby(["estimator_name", "n"])["covered"].agg("mean").reset_index()

coverage_df = coverage_df.loc[coverage_df["estimator_name"] != "standard_linear_small"]

coverage_df

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}

coverage_df["Estimator"] = coverage_df["estimator_name"].apply(lambda x: translation_dict[x])

coverage_df["Sample Size"] = coverage_df["n"]

coverage_df["Coverage Probability"] = coverage_df["covered"]

dir(ax)

['ArtistList',

'_AxesBase__clear',

'_PROPERTIES_EXCLUDED_FROM_SET',

'__class__',

'__delattr__',

'__dict__',

'__dir__',

'__doc__',

'__eq__',

'__format__',

'__ge__',

'__getattribute__',

'__getstate__',

'__gt__',

'__hash__',

'__init__',

'__init_subclass__',

'__le__',

'__lt__',

'__module__',

'__ne__',

'__new__',

'__reduce__',

'__reduce_ex__',

'__repr__',

'__setattr__',

'__setstate__',

'__sizeof__',

'__str__',

'__subclasshook__',

'__weakref__',

'_add_text',

'_adjustable',

'_agg_filter',

'_alias_map',

'_alpha',

'_anchor',

'_animated',

'_aspect',

'_autotitlepos',

'_axes',

'_axes_class',

'_axes_locator',

'_axis_map',

'_axis_names',

'_axisbelow',

'_box_aspect',

'_callbacks',

'_check_no_units',

'_children',

'_clipon',

'_clippath',

'_cm_set',

'_colorbars',

'_convert_dx',

'_current_image',

'_default_contains',

'_deprecate_noninstance',

'_errorevery_to_mask',

'_facecolor',

'_fill_between_x_or_y',

'_frameon',

'_fully_clipped_to_axes',

'_gci',

'_gen_axes_patch',

'_gen_axes_spines',

'_get_aspect_ratio',

'_get_lines',

'_get_pan_points',

'_get_patches_for_fill',

'_get_view',

'_gid',

'_gridOn',

'_in_layout',

'_init_axis',

'_internal_update',

'_label',

'_label_outer_xaxis',

'_label_outer_yaxis',

'_left_title',

'_make_twin_axes',

'_mouseover',

'_mouseover_set',

'_navigate',

'_navigate_mode',

'_originalPosition',

'_parse_scatter_color_args',

'_path_effects',

'_pcolor_grid_deprecation_helper',

'_pcolorargs',

'_picker',

'_position',

'_prepare_view_from_bbox',

'_process_unit_info',

'_projection_init',

'_quiver_units',

'_rasterization_zorder',

'_rasterized',

'_remove_legend',

'_remove_method',

'_request_autoscale_view',

'_right_title',

'_sci',

'_set_alpha_for_array',

'_set_artist_props',

'_set_gc_clip',

'_set_lim_and_transforms',

'_set_position',

'_set_title_offset_trans',

'_set_view',

'_set_view_from_bbox',

'_shared_axes',

'_sharex',

'_sharey',

'_sketch',

'_snap',

'_stale',

'_stale_viewlims',

'_sticky_edges',

'_subclass_uses_cla',

'_subplotspec',

'_tight',

'_transform',

'_transformSet',

'_twinned_axes',

'_unit_change_handler',

'_unstale_viewLim',

'_update_image_limits',

'_update_line_limits',

'_update_patch_limits',

'_update_props',

'_update_set_signature_and_docstring',

'_update_title_position',

'_update_transScale',

'_url',

'_use_sticky_edges',

'_validate_converted_limits',

'_viewLim',

'_visible',

'_xaxis_transform',

'_xmargin',

'_yaxis_transform',

'_ymargin',

'acorr',

'add_artist',

'add_callback',

'add_child_axes',

'add_collection',

'add_container',

'add_image',

'add_line',

'add_patch',

'add_table',

'angle_spectrum',

'annotate',

'apply_aspect',

'arrow',

'artists',

'autoscale',

'autoscale_view',

'axes',

'axhline',

'axhspan',

'axis',

'axison',

'axline',

'axvline',

'axvspan',

'bar',

'bar_label',

'barbs',

'barh',

'bbox',

'boxplot',

'broken_barh',

'bxp',

'callbacks',

'can_pan',

'can_zoom',

'child_axes',

'cla',

'clabel',

'clear',

'clipbox',

'cohere',

'collections',

'containers',

'contains',

'contains_point',

'contour',

'contourf',

'convert_xunits',

'convert_yunits',

'csd',

'dataLim',

'drag_pan',

'draw',

'draw_artist',

'end_pan',

'errorbar',

'eventplot',

'figure',

'fill',

'fill_between',

'fill_betweenx',

'findobj',

'fmt_xdata',

'fmt_ydata',

'format_coord',

'format_cursor_data',

'format_xdata',

'format_ydata',

'get_adjustable',

'get_agg_filter',

'get_alpha',

'get_anchor',

'get_animated',

'get_aspect',

'get_autoscale_on',

'get_autoscalex_on',

'get_autoscaley_on',

'get_axes_locator',

'get_axisbelow',

'get_box_aspect',

'get_children',

'get_clip_box',

'get_clip_on',

'get_clip_path',

'get_cursor_data',

'get_data_ratio',

'get_default_bbox_extra_artists',

'get_facecolor',

'get_fc',

'get_figure',

'get_frame_on',

'get_gid',

'get_gridspec',

'get_images',

'get_in_layout',

'get_label',

'get_legend',

'get_legend_handles_labels',

'get_lines',

'get_mouseover',

'get_navigate',

'get_navigate_mode',

'get_path_effects',

'get_picker',

'get_position',

'get_rasterization_zorder',

'get_rasterized',

'get_renderer_cache',

'get_shared_x_axes',

'get_shared_y_axes',

'get_sketch_params',

'get_snap',

'get_subplotspec',

'get_tightbbox',

'get_title',

'get_transform',

'get_transformed_clip_path_and_affine',

'get_url',

'get_visible',

'get_window_extent',

'get_xaxis',

'get_xaxis_text1_transform',

'get_xaxis_text2_transform',

'get_xaxis_transform',

'get_xbound',

'get_xgridlines',

'get_xlabel',

'get_xlim',

'get_xmajorticklabels',

'get_xminorticklabels',

'get_xscale',

'get_xticklabels',

'get_xticklines',

'get_xticks',

'get_yaxis',

'get_yaxis_text1_transform',

'get_yaxis_text2_transform',

'get_yaxis_transform',

'get_ybound',

'get_ygridlines',

'get_ylabel',

'get_ylim',

'get_ymajorticklabels',

'get_yminorticklabels',

'get_yscale',

'get_yticklabels',

'get_yticklines',

'get_yticks',

'get_zorder',

'grid',

'has_data',

'have_units',

'hexbin',

'hist',

'hist2d',

'hlines',

'ignore_existing_data_limits',

'images',

'imshow',

'in_axes',

'indicate_inset',

'indicate_inset_zoom',

'inset_axes',

'invert_xaxis',

'invert_yaxis',

'is_transform_set',

'label_outer',

'legend',

'legend_',

'lines',

'locator_params',

'loglog',

'magnitude_spectrum',

'margins',

'matshow',

'minorticks_off',

'minorticks_on',

'mouseover',

'name',

'patch',

'patches',

'pchanged',

'pcolor',

'pcolorfast',

'pcolormesh',

'phase_spectrum',

'pick',

'pickable',

'pie',

'plot',

'plot_date',

'properties',

'psd',

'quiver',

'quiverkey',

'redraw_in_frame',

'relim',

'remove',

'remove_callback',

'reset_position',

'scatter',

'secondary_xaxis',

'secondary_yaxis',

'semilogx',

'semilogy',

'set',

'set_adjustable',

'set_agg_filter',

'set_alpha',

'set_anchor',

'set_animated',

'set_aspect',

'set_autoscale_on',

'set_autoscalex_on',

'set_autoscaley_on',

'set_axes_locator',

'set_axis_off',

'set_axis_on',

'set_axisbelow',

'set_box_aspect',

'set_clip_box',

'set_clip_on',

'set_clip_path',

'set_facecolor',

'set_fc',

'set_figure',

'set_frame_on',

'set_gid',

'set_in_layout',

'set_label',

'set_mouseover',

'set_navigate',

'set_navigate_mode',

'set_path_effects',

'set_picker',

'set_position',

'set_prop_cycle',

'set_rasterization_zorder',

'set_rasterized',

'set_sketch_params',

'set_snap',

'set_subplotspec',

'set_title',

'set_transform',

'set_url',

'set_visible',

'set_xbound',

'set_xlabel',

'set_xlim',

'set_xmargin',

'set_xscale',

'set_xticklabels',

'set_xticks',

'set_ybound',

'set_ylabel',

'set_ylim',

'set_ymargin',

'set_yscale',

'set_yticklabels',

'set_yticks',

'set_zorder',

'sharex',

'sharey',

'specgram',

'spines',

'spy',

'stackplot',

'stairs',

'stale',

'stale_callback',

'start_pan',

'stem',

'step',

'sticky_edges',

'streamplot',

'table',

'tables',

'text',

'texts',

'tick_params',

'ticklabel_format',

'title',

'titleOffsetTrans',

'transAxes',

'transData',

'transLimits',

'transScale',

'tricontour',

'tricontourf',

'tripcolor',

'triplot',

'twinx',

'twiny',

'update',

'update_datalim',

'update_from',

'use_sticky_edges',

'viewLim',

'violin',

'violinplot',

'vlines',

'xaxis',

'xaxis_date',

'xaxis_inverted',

'xcorr',

'yaxis',

'yaxis_date',

'yaxis_inverted',

'zorder']

ax.get_xticklabels()

[Text(0, 0, 'Double ML\n (Bootstrap)'),

Text(1, 0, 'Double ML\n (Non-Bootstrap)'),

Text(2, 0, 'Doubly Robust\n(Bootstrap)'),

Text(3, 0, 'Doubly Robust\n(Non-Bootstrap)'),

Text(4, 0, 'Standard Linear\nModel')]

import seaborn as sns

import matplotlib.pyplot as plt

import matplotlib

matplotlib.rcParams['figure.figsize'] = [8, 5]

ax = sns.barplot(data=coverage_df,

x="Estimator", y="Coverage Probability", hue="Sample Size",

palette = sns.color_palette("Blues_r"))

plt.legend(loc='lower left')

plt.title("Coverage Probabilities of Various Estimators")

#ax.set_xticklabels(ax rotation=0, fontsize=8)

plt.show()

To summarize, here are my main conclusions from this simulation:

- econml is a great package for causal inference

- Bootstrapping can be used to generate accurate coverage probabilities even when traditional statistical estimation fails

- Double Machine Learning out-performs doubly robust estimation in conditions of sparsity and low sample size

from scipy.stats import binom

observed_list = []

prob_list = []

for num_observed in range(30, 51):

observed_list.append(num_observed)

if num_observed < 0.95 * 50:

prob_list.append(binom.cdf(num_observed, 50, .95))

else:

prob_list.append(1 - binom.cdf(num_observed -1, 50, .95))

pd.DataFrame({"probs": np.array(prob_list) * 16, "observed": observed_list})

.dataframe tbody tr th {

vertical-align: top;

}

.dataframe thead th {

text-align: right;

}